Regulating AI “Wokeness”?

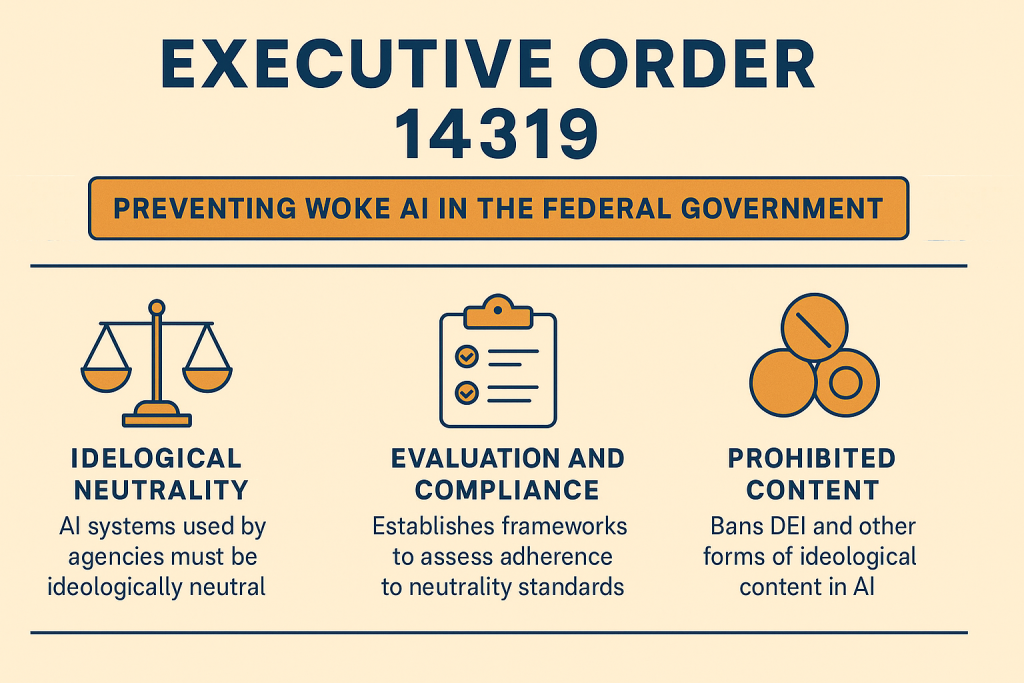

Back in July, the US unveiled new guidelines that the White House says are aimed at curbing what it calls “woke” artificial intelligence (AI) in federal agencies. The move came as US President Donald Trump signed Executive Order 14319, intensifying his administration’s campaign to remove “ideological bias” from government systems in favour of what it describes as “neutral, yet pro-American” technology.

The AI integrity and neutrality framework, the Trump administration’s latest directive, lays down evaluation protocols for AI systems used in government. Departments including Education, Defence and Health are expected to comply immediately. Under the framework, agencies must remove datasets, algorithms and training approaches that allegedly promote bias rooted in identity politics, race or gender ideology, collectively referred to in recent years as “DEI” (Diversity, Equity, Inclusion). The order targets the removal of such approaches from the development of large language models such as ChatGPT and Gemini.

It is a significant policy shift whose effects will reverberate beyond the American government, shaping how AI tools are designed, used and evaluated in the public sector worldwide.

From Ethical Safeguards to Ideological Filters and Balancing Them

Past US administrations emphasised ethical safeguards and inclusivity in regulating AI. This time, however, the Trump administration’s approach reflects a pivot towards ideological neutrality as a national priority.

Supporters of the new framework cite recent controversies involving large language models making politically charged statements when prompted with neutral queries, such as X’s Grok voicing explicitly antisemitic sentiments.

Some critics warn that the move risks gutting important accountability tools. They argue that stripping away context risks erasing genuine disparities in access and service, given that not “everyone starts from the same place or the same perspective.”

How does this shape the way authorities view AI training more broadly? Because the US is home to a large share of the world’s data centres and AI companies, the rules it sets for AI development may affect the AI industry at large.

The Problem with “Universal Take” AI

Until now, many AI systems used in government were designed with broad, “one-size-fits-all” assumptions about fairness and inclusion. Many of these frameworks were built by academic and corporate partners focused on social equity and modern takes on inclusivity. However, this approach to regulating AI came with its own problems, especially when data or algorithms created to enhance inclusivity were perceived as overstepping into policy enforcement.

For example, an AI system used by a Department of Housing task force was criticised in 2023 for deprioritising certain grant applications because they did not mention diversity initiatives. Similarly, automated content moderation tools on federal digital platforms were flagged for filtering political speech inconsistently, producing unreliable and sometimes controversial results.

The White House has described these systems as “well-intentioned” but lacking transparency and failing to properly represent neutrality and free expression. In response, the new framework promotes what it calls “values-aware AI”, a method in which government AI tools are audited for neutrality and tested against politically balanced datasets.

Reframing AI as a Public Good

Looking forward, the Trump administration’s approach invites a broader national conversation about AI’s purpose: Is it simply a tool to optimise public service delivery, or is it also a reflection of the nation’s core values?

In an age of rapid technological growth, AI regulation must evolve alongside society without losing sight of fairness, security and public trust. As technology becomes more intertwined with governance, it is vital that agencies remain adaptable. AI systems must be both reliable and resilient: able to understand the contextual nuances of changing societal needs and evolving threats, and to remain balanced amid shifting political climates.

“We are not calling for stagnation. We are calling for balance,” said Ellis during the press conference. “AI should help people, not divide them.”

Yet critics argue that the Trump administration’s take on regulating AI, while emphasising neutrality and resilience, risks oversimplifying the complex ethical terrain of artificial intelligence. Some experts warn that, by focusing heavily on performance metrics and ideological neutrality, the policy may sideline deeper concerns about systemic bias, transparency and the need for inclusive governance.

True neutrality in AI is arguably a myth if the data and design processes themselves are not representative. Generative AI can even sway online deliberations and public discourse. This raises the possibility that authorities could themselves use AI to reinforce policies and, in the long run, shape the way people think.

Opponents also caution that framing AI as a reflection of national values can lead to the politicisation of technology, where definitions of fairness and trustworthiness shift with each administration. They argue that the public good should be defined not solely by efficiency or resilience, but by a commitment to equity, accountability and democratic oversight. In regulating AI, it is essential that authorities and the public at large hold each other in check to ensure that a balanced ethical perspective is maintained.

As the debate continues, many are calling for a more participatory approach that gives marginalised communities a voice and prioritises ethics over political expediency. In this view, the future of AI is not just about integrity, but about justice. The journey ahead requires thoughtful collaboration between technologists, policymakers and communities. It is not about choosing sides but about choosing integrity.

Conclusion

As AI continues to shape the way services are delivered, information is sorted and citizens are heard, the debate over what it should and should not do will only intensify. Regulating AI may well become a priority for many governments as the technology continues to develop.

Singapore-based firm User Experience Researchers (USER) is taking a proactive stance on incorporating ethics into AI development by embedding ethical principles and neutrality safeguards when customising AI models and enterprise tools. With a strong focus on education, healthcare and public sector applications, USER integrates each customer’s existing policies and compliance requirements, ensuring that its solutions respect data privacy, avoid bias and serve diverse user needs. The approach combines human-centred design with data-driven insights, enabling institutions to deploy technology that is both effective and equitable.

To tackle neutrality, USER employs a comprehensive strategy that includes usability testing with representative populations, ethics checklists, and context-aware model training. By aligning technical innovation with ethical accountability, USER exemplifies how private enterprise can contribute meaningfully to the public good in the AI era.

About USER Experience Researchers Pte. Ltd.

USER is a leading UX-focused company specialising in digital transformation consultancy, agile development and workforce solutions. We are steadfastly committed to innovating with the best of today’s technology to promote sustainable growth for businesses and industries.

For more information, contact USER through project@user.com.sg

Article by: John Idel Janolino